Neural Networks

Neural networks (NNs) are computing systems loosely modelled on the behaviour of biological neurons. They are composed of interconnected nodes, each of which performs a simple calculation, typically a weighted sum of its inputs passed through an activation function, to produce its output. These networks learn by adjusting the weights of their connections based on training data, which makes them powerful tools for solving complex problems. Neural networks are used in a variety of fields, such as computer vision, natural language processing, and robotics. There are several types of NNs, including feed-forward back-propagation neural networks (FFBP-NNs), recurrent neural networks (RNNs), and radial-basis-function networks (RBFNs).
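As a rough illustration of the "simple calculation" a single node performs, the sketch below computes a weighted sum of the inputs plus a bias and passes it through a sigmoid activation. The function name `neuron_output`, the choice of sigmoid, and the numeric values are assumptions made for this example only; other activation functions are equally common.

```python
import math

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed by a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical inputs and weights, chosen only for illustration.
y = neuron_output(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(y)
```

A network is built by wiring many such nodes together, so that the outputs of one layer become the inputs of the next.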

Feed-forward back-propagation neural networks

Feed-forward back-propagation neural networks are among the most widely used types of neural network. In this type of network, the inputs are fed through the network in a forward direction to produce an output. The error between that output and the desired output is then propagated backward through the network to adjust the weights of the connections between neurons. This process is repeated over the training data to progressively reduce the error. This type of network is used for a wide range of applications, such as image classification, speech recognition, and medical diagnosis.
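The forward and backward passes described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: it assumes one hidden layer, sigmoid activations, a squared-error loss, per-sample gradient descent, and the XOR problem as toy data. All names (`init_params`, `forward`, `train`, `xor_data`) and hyperparameters are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_params(n_in, n_hidden, seed=0):
    """Small random weights and zero biases: one hidden layer, one output unit."""
    rng = random.Random(seed)
    w_hidden = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)]
                for _ in range(n_hidden)]
    w_out = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
    return w_hidden, [0.0] * n_hidden, w_out, 0.0

def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Forward pass: returns the hidden activations and the network output."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output

def mean_squared_error(data, params):
    return sum((forward(x, *params)[1] - t) ** 2 for x, t in data) / len(data)

def train(data, params, epochs=5000, lr=0.5):
    """Per-sample gradient descent; works on copies, so `params` is unchanged."""
    w_hidden = [row[:] for row in params[0]]
    b_hidden = params[1][:]
    w_out = params[2][:]
    b_out = params[3]
    for _ in range(epochs):
        for x, target in data:
            hidden, output = forward(x, w_hidden, b_hidden, w_out, b_out)
            # Backward pass: gradient of 0.5 * (output - target)^2 with
            # respect to the output unit's pre-activation.
            delta_out = (output - target) * output * (1.0 - output)
            # Push the error back through the output weights to each hidden unit.
            delta_hidden = [delta_out * w_out[j] * h * (1.0 - h)
                            for j, h in enumerate(hidden)]
            # Adjust weights and biases against the gradient.
            for j, h in enumerate(hidden):
                w_out[j] -= lr * delta_out * h
                b_hidden[j] -= lr * delta_hidden[j]
                for i, xi in enumerate(x):
                    w_hidden[j][i] -= lr * delta_hidden[j] * xi
            b_out -= lr * delta_out
    return w_hidden, b_hidden, w_out, b_out

# Toy data (an assumption for this sketch): the XOR function.
xor_data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
            ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

initial = init_params(n_in=2, n_hidden=4)
initial_loss = mean_squared_error(xor_data, initial)
trained = train(xor_data, initial)
final_loss = mean_squared_error(xor_data, trained)
print(initial_loss, final_loss)
```

Comparing the two printed losses shows how repeated forward and backward passes reduce the error. In practice, a library with automatic differentiation would normally replace the hand-written gradient code.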

Recurrent neural networks

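Recurrent neural networks (RNNs) are specialized neural networks designed to model sequences of data and capture temporal dependencies. They do this by feeding the hidden state from one time step back into the network at the next step, so earlier inputs can influence later outputs. This type of network is useful in applications such as natural language processing, machine translation, and speech recognition. RNNs can retain information across time steps and can be used to model dynamic systems, although basic RNNs tend to struggle with very long intervals, a limitation that gated variants such as long short-term memory (LSTM) networks were designed to address.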

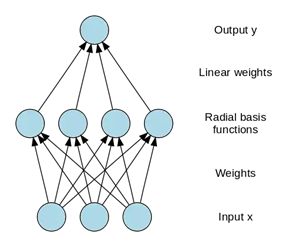
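To make the idea of a network that remembers earlier inputs more concrete, here is a minimal sketch of a basic (Elman-style) recurrent update, h_t = tanh(W_x x_t + W_h h_{t-1} + b), written in plain Python. The weights are fixed, hypothetical values rather than trained ones, and the names `rnn_step` and `run_rnn` are assumptions for this example.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent update: the new state depends on the input AND the old state."""
    return [math.tanh(sum(w_x[j][i] * x[i] for i in range(len(x)))
                      + sum(w_h[j][k] * h_prev[k] for k in range(len(h_prev)))
                      + b[j])
            for j in range(len(b))]

def run_rnn(sequence, w_x, w_h, b):
    """Feed a sequence through the cell, starting from an all-zero hidden state."""
    h = [0.0] * len(b)
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Hypothetical fixed weights: 1 input feature, 2 hidden units.
w_x = [[0.5], [-0.3]]
w_h = [[0.1, 0.4], [-0.2, 0.3]]
b = [0.0, 0.0]

# Two sequences that end with the same input but differ in their history.
states_a = run_rnn([[1.0], [0.0], [1.0]], w_x, w_h, b)
states_b = run_rnn([[0.0], [0.0], [1.0]], w_x, w_h, b)
print(states_a[-1], states_b[-1])
```

Both sequences end with the same input, yet the final hidden states differ because the state carries information from earlier steps. That carried-over state is what lets an RNN model temporal dependencies.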

Radial-basis-function networks

A radial-basis-function network (RBFN) is a type of neural network commonly used in pattern recognition and classification. In this type of network, each hidden neuron applies a radial basis function, typically a Gaussian, as its activation function, so its response depends on the distance between the input and the neuron's centre. The network output is a weighted sum of these responses. This type of network is often used in applications involving function approximation, prediction, classification, and clustering.
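The following sketch shows how such a network could compute its output, assuming Gaussian basis functions and a linear output layer. The centres, widths, and weights are hypothetical values picked for illustration. In practice they would be fitted to data, for example by clustering to choose the centres and least squares to choose the output weights.

```python
import math

def gaussian_rbf(x, center, width):
    """Response decays with the squared distance between x and the centre."""
    sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-sq_dist / (2.0 * width ** 2))

def rbf_network(x, centers, widths, weights, bias=0.0):
    """Weighted sum of the hidden units' radial responses."""
    activations = [gaussian_rbf(x, c, s) for c, s in zip(centers, widths)]
    return sum(w * a for w, a in zip(weights, activations)) + bias

# Hypothetical parameters: two hidden units with opposite output weights.
centers = [[0.0, 0.0], [1.0, 1.0]]
widths = [0.5, 0.5]
weights = [1.0, -1.0]

y_at_first_center = rbf_network([0.0, 0.0], centers, widths, weights)
y_midpoint = rbf_network([0.5, 0.5], centers, widths, weights)
print(y_at_first_center, y_midpoint)
```

An input close to the first centre is dominated by that unit's positive weight, while at the midpoint the two equal and opposite responses cancel out.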

Some advantages of neural networks:

- The ability to learn from data, without being explicitly programmed.

- The capacity to generalize and make predictions about new, previously unseen data.

- The ability to learn complex nonlinear relationships between inputs and outputs.

- The capability of working with noisy or incomplete data.

- NNs can model both linear and nonlinear functions.

- NNs can deal with large datasets.

Some disadvantages of neural networks:

- NNs are difficult to interpret because they do not provide explicit explanations of the relationships between predictors and the outcome.

- There is no built-in variable selection mechanism, so variables should be selected manually before training.

- Training is computationally expensive when a network has many weights to estimate for many variables. Conversely, if the dataset is small, NNs tend to overfit and may perform poorly.

- The implementation is complicated, and the results are difficult for managers to interpret.